The AI Ecosystem Revolution: How Open-Source Models Drive Innovation

AI’s Open-Source Shift

The AI revolution is no longer confined to massive research labs—open-source models are reshaping the landscape. From independent developers fine-tuning models to enterprise-grade deployments, innovation is accelerating at an unprecedented pace.

Nebula Block bridges the gap between raw open-source potential and production-ready AI. It offers both high-performance GPU (e.g., H100, H200) instances and managed API endpoints optimized for fine-tuning large-scale models (e.g., LLaMA, Qwen). By reducing infrastructure costs, Nebula Block enables developers to scale AI applications without the burden of complex deployment. As demand for efficient AI computing grows, serverless architectures like Nebula Block’s are shaping the next generation of accessible model development.

Proprietary vs. Open AI

Historically, AI breakthroughs like Google's Gemini or OpenAI’s GPT models emerged from elite labs with massive resources. But today, projects like Meta's Llama 3, Mistral, and DeepSeek-R1 are open-sourcing state-of-the-art architectures. This changes everything:

- Democratized Experimentation: Startups and indie developers can fine-tune models for niche use cases (like medical diagnostics or legal analysis) without billion-dollar budgets.

- Community-Driven Progress: Open-source communities rapidly patch limitations, as seen when the Hugging Face community quantized Llama 2 to run efficiently on consumer hardware.

- Transparency & Trust: Enterprises hesitant about "black box" AI can inspect model weights and, where the publisher releases them, training data and ethical guardrails.
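To put the quantization point above in rough numbers: the memory needed for a model's weights scales with parameter count times bits per weight. The sketch below is a back-of-the-envelope estimate only. The function name is made up for illustration, the 7-billion-parameter figure is an assumed round number rather than an exact model size, and the estimate ignores activations, the KV cache, and the small overhead quantized formats add for scales and zero points.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


params = 7e9  # assumed round figure for a "7B" model
fp16_gb = weight_memory_gb(params, 16)  # half-precision weights
int4_gb = weight_memory_gb(params, 4)   # 4-bit quantized weights

print(f"16-bit: {fp16_gb:.1f} GB, 4-bit: {int4_gb:.1f} GB")
```

Under these assumptions the weights drop from about 14 GB to about 3.5 GB, which is the difference between needing a data-center GPU and fitting on a consumer card.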

Why This Matters

- Fine-Tuning Freedom

Open-source models arrive as "generalists". Need a specialized chatbot for your SaaS platform? Fine-tune Meta's Llama-3.3 or DeepSeek-R1-0528 on your proprietary data using Nebula’s NVIDIA H100 GPUs. Unlike API-locked alternatives, you retain full control over sensitive data.

- Cost-Effective Scaling

Using quantization techniques like GPTQ or AWQ, you can shrink models to run efficiently on Nebula’s cost-optimized GPU instances ($0.52/hr for RTX 6000 Ada). Prototyping? Spin up an endpoint via our managed API in minutes.

- The Rise of Composability

Build "AI Legos" by chaining open-source tools:

- Generate text with Llama 3.1

- Create images via Stable Diffusion XL

- Run RAG-enhanced searches using UAE-Large-V1 embeddings

All orchestrated through Nebula Block’s unified endpoints.
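The RAG step in a chain like this usually comes down to ranking stored documents by how similar their embeddings are to the query embedding, then passing the best matches to the text model. Here is a minimal sketch of that ranking using cosine similarity. The three-dimensional vectors and document names are made up for illustration; real embeddings from a model such as UAE-Large-V1 have far more dimensions and would come from an embedding endpoint, which this sketch does not call.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_vec, doc_vecs, k=2):
    """Return the ids of the k documents most similar to the query."""
    scored = [(cosine_similarity(query_vec, vec), doc_id)
              for doc_id, vec in doc_vecs.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [doc_id for _, doc_id in scored[:k]]


# Toy embeddings (hypothetical values, not produced by a real model)
docs = {
    "pricing": [0.9, 0.1, 0.0],
    "gpu-specs": [0.1, 0.9, 0.2],
    "billing-faq": [0.8, 0.2, 0.1],
}
query = [1.0, 0.0, 0.0]

context_ids = top_k(query, docs, k=2)
# The retrieved ids would then be used to build the prompt for the text model
prompt = f"Answer using these documents: {', '.join(context_ids)}"
print(prompt)
```

In a production setup a vector database would typically replace the in-memory dictionary, but the ranking idea is the same.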

How Infrastructure Accelerates AI Innovation

Nebula Block simplifies open-source AI:

- Deploy Easily: Serverless APIs with vLLM deliver sub-100ms latency, handling 10,000+ requests/second.

- Scale Seamlessly: Kubernetes across 100+ data centers ensures compliance and capacity.

- Manage Data: S3-compatible storage supports RAG datasets.

These tools empower developers to innovate without infrastructure complexity.

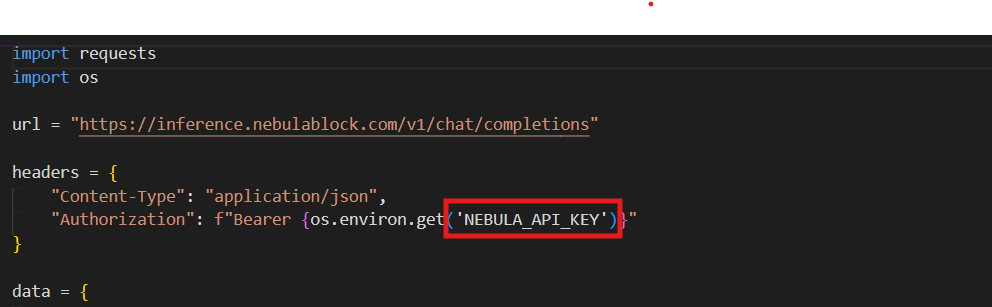

Here’s a simple example of querying a model through Nebula Block’s serverless framework:

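```python
import os

import requests

# Chat completions endpoint for Nebula Block's serverless inference API
url = "https://inference.nebulablock.com/v1/chat/completions"

headers = {
    "Content-Type": "application/json",
    # Read the key from the environment; raises KeyError if it is not set
    "Authorization": f"Bearer {os.environ['NEBULA_API_KEY']}",
}

data = {
    "messages": [
        {"role": "user", "content": "Is Montreal a thriving hub for the AI industry?"}
    ],
    "model": "deepseek-ai/DeepSeek-R1-0528",
    "max_tokens": None,  # serialized as JSON null
    "temperature": 1,
    "top_p": 0.9,
    "stream": False,
}

# A timeout keeps the script from hanging indefinitely on a stalled connection
response = requests.post(url, headers=headers, json=data, timeout=120)
response.raise_for_status()  # raise on HTTP 4xx/5xx responses
print(response.json())
```

Important: generate an API key and set it as the NEBULA_API_KEY environment variable before running the example.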
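Printing the raw JSON is fine for a first test, but most applications only need the generated text. The helper below is a sketch of how to pull it out. It assumes the response follows the OpenAI-style chat completions schema (`choices[0].message.content`), which the `/v1/chat/completions` path suggests but this post does not confirm. The function name and the sample payload are made up for illustration, so check the API documentation for the actual response shape.

```python
def extract_reply(payload: dict) -> str:
    """Return the first completion's text, assuming an OpenAI-style response body."""
    choices = payload.get("choices") or []
    if not choices:
        # Surface error bodies clearly instead of failing with a KeyError
        raise ValueError(f"No choices in response: {payload}")
    return choices[0]["message"]["content"]


# Hand-written sample payload for illustration, not real API output
sample = {"choices": [{"message": {"role": "assistant", "content": "Yes."}}]}
reply = extract_reply(sample)
print(reply)
```

With the request example, you would call `extract_reply(response.json())` in place of printing the whole body.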

Challenges & The Road Ahead

While open-source AI is thriving, challenges remain:

- Quality Control: Not all community models meet robust evaluation standards.

- Fragmentation: With multiple model variants, consistency in deployment can be complex.

- Compute Costs: Large-scale model training still demands powerful GPU resources.

Nebula Block addresses these concerns by ensuring cost-efficient AI scaling, giving developers the flexibility to deploy state-of-the-art models without infrastructure roadblocks. Fragmentation becomes manageable when you can A/B test model variants across identical GPU configurations, while our pay-per-second billing prevents cost surprises during experimentation.
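To see why per-second billing matters for short experiments, here is a small worked comparison. It uses the $0.52/hr RTX 6000 Ada rate quoted earlier in this post. The 25-minute run length is an assumed example, and the whole-hour scheme is a hypothetical alternative included only for contrast, not a description of any specific provider.

```python
import math

HOURLY_RATE_USD = 0.52  # RTX 6000 Ada rate quoted earlier in this post


def cost_per_second_billing(seconds: int, hourly_rate: float = HOURLY_RATE_USD) -> float:
    """Pay only for the seconds actually used."""
    return seconds * hourly_rate / 3600


def cost_whole_hour_billing(seconds: int, hourly_rate: float = HOURLY_RATE_USD) -> float:
    """Hypothetical comparison: every started hour is charged in full."""
    return math.ceil(seconds / 3600) * hourly_rate


run_seconds = 25 * 60  # assumed 25-minute experiment
per_second = cost_per_second_billing(run_seconds)
whole_hour = cost_whole_hour_billing(run_seconds)
print(f"per-second: ${per_second:.2f}, whole-hour: ${whole_hour:.2f}")
```

For this assumed run, that is roughly $0.22 versus $0.52, and the gap adds up quickly when you launch many short A/B tests.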

The Future: Open AI Without Limits

The future of AI belongs to decentralized innovation. As open-source models continue to evolve, the ability to fine-tune, adapt, and deploy AI seamlessly will define the next era of breakthroughs.

Nebula Block is committed to accelerating this transformation—where developers, startups, enterprises and researchers can build AI applications without restrictions.

Next Steps

Sign up and explore now.

🔍 Learn more: Visit our blog and documentation for more insights, or schedule a demo to optimize your search solutions.

📬 Get in touch: Join our Discord community for help or Contact Us.

Stay Connected

💻 Website: nebulablock.com

📖 Docs: docs.nebulablock.com

🐦 Twitter: @nebulablockdata

🐙 GitHub: Nebula-Block-Data
