Optimizing AI Search Engines with Sentence Embeddings

Introduction

AI search engines demand low-latency, context-aware results, but high compute costs and infrastructure complexity often hinder performance. Sentence embeddings, which transform text into semantic vectors, enable smarter search by understanding user intent.

Nebula Block’s serverless platform, powered by NVIDIA H100/H200 GPUs, delivers cost-efficient, scalable infrastructure, saving 30-70% lower than major cloud providers. Unlike traditional GPU clusters requiring manual management, Nebula Block simplifies embedding-based search. This blog explores how Nebula Block optimizes AI search engines with sentence embeddings, offering developers insights to build advanced solutions.

Insights into Sentence Embeddings for Search

Sentence embeddings convert text into 1024-dimensional vectors, enabling semantic search to match queries with relevant documents despite varied phrasing. Nebula Block’s platform, processes thousands of embeddings per second at sub-100ms latency. Kubernetes orchestration across 100+ data centers, including Canadian infrastructure for data sovereignty, ensures scalability and compliance. Pre-configured frameworks like PyTorch and HuggingFace Accelerate simplify deployment, while S3-compatible storage streamlines data management.

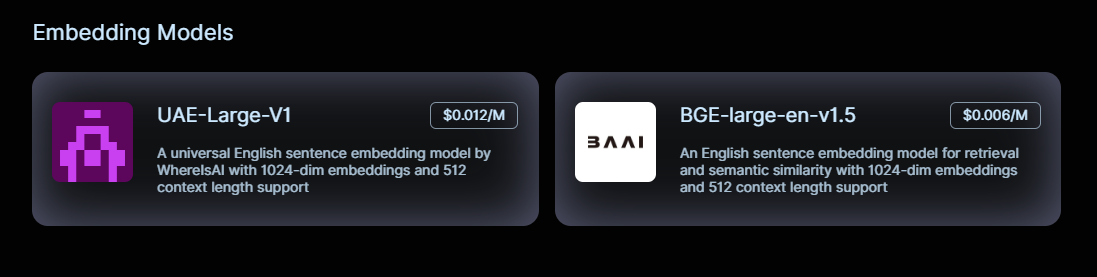

Two embedding models shine on Nebula Block:

- WhereIsAI/UAE-Large-V1: Optimized for semantic similarity. This model mitigates cosine saturation, making it ideal for retrieval-based search systems and question-answering applications, achieving 25% higher relevance than traditional methods.

- BAAI/bge-large-en-v1.5: Excelling in dense retrieval, this model ranks high on MTEB benchmarks. It enhances document retrieval, recommendation systems, and AI-driven knowledge bases.

How UAE-Large-V1 and BGE-large-en-v1.5 Improve Search

By integrating these models into Nebula Block’s scalable AI infrastructure, search engines can achieve:

- Semantic Matching: Retrieves contextually relevant results without exact keywords. Ex: Finds "Toronto apartments" even if the document says "condos in Ontario".

- Improved Ranking: Prioritizes results by meaning, not keyword frequency.

- Personalized Search: Adapts to user intent for more accurate recommendations.

- Efficiency: Vector comparisons (cosine similarity) are 100x faster than regex searches.

Model Showdown: UAE-Large-V1 vs. BGE-Large-EN-v1.5

| Model | Best For | MTEB Benchmark | Nebula Block Optimization |

|---|---|---|---|

| UAE-Large-V1 | Semantic similarity (Q&A, chatbots) | 85.3% on STS | 4x batch processing on H100 |

| BGE-Large-EN-v1.5 | Dense retrieval (enterprise search) | 1st place on MTEB | Low-latency deployments in Canada/EU |

Pro Tip: Use UAE for conversational apps, BGE for document-heavy workflows.

Why Nebula Block Excels for Embedding-Based Search

Nebula Block’s platform is tailored for AI search:

- Enterprise Hardware: Access H100, A100, and RTX 5090 GPUs without capital investment.

- Cost Efficiency: 30-70% savings make large-scale search affordable.

- Scalability: Kubernetes orchestration across global data centers handles peak query loads seamlessly.

- Low Latency: Sub-100ms inference ensures real-time user experiences.

- Ease of Use: Pre-configured frameworks and S3-compatible storage simplify workflows.

Conclusion & Next Steps

Nebula Block revolutionizes AI search engines by offering cost-efficient, scalable embedding solutions. Whether optimizing enterprise search or enhancing recommendation systems, its flexible pricing model and API-driven integration ensure seamless deployment.

Sign up and explore now.

🔍 Learn more: Visit our blog and documents for more insights or schedule a demo to optimize your search solutions.

📬 Get in touch: Join our Discord community for help or Contact Us.

Stay Connected

💻 Website: nebulablock.com

📖 Docs: docs.nebulablock.com

🐦 Twitter: @nebulablockdata

🐙 GitHub: Nebula-Block-Data

🎮 Discord: Join our Discord

✍️ Blog: Read our Blog

📚 Medium: Follow on Medium

🔗 LinkedIn: Connect on LinkedIn

▶️ YouTube: Subscribe on YouTube