Fine-Tune, Infer, Iterate: Claude-Sonnet-4 Hits Nebula Block for Builders

The Claude Sonnet 4 model from Anthropic has landed on Nebula Block, and it’s a big deal for builders. With a 200K-token context window, fast inference, and native tool use, Sonnet 4 is built for real-world AI workflows. Paired with Nebula Block’s per-second GPU billing and instant deployment stack, it makes fine-tuning, testing, and scaling Claude-based agents much more straightforward.

Why Claude Sonnet 4?

Claude Sonnet 4 is Anthropic’s mid-size model in the Claude 4 family—designed for high-volume, developer-centric tasks like:

- Code generation and refactoring

- Long-context document analysis (up to 200K tokens)

- Agentic workflows with native tool use and parallel function calls

- Vision and multimodal reasoning (in preview)

With support for sandboxed code execution, Claude Sonnet 4 is ideal for iterative, complex reasoning and production inference tasks.
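To get a feel for the 200K-token limit mentioned above, here is a minimal sketch that checks whether a document is likely to fit in one request. It assumes a rough rule of thumb of about 4 characters per token for English text, which is not Claude's actual tokenizer, and the `reserved_for_output` default is an illustrative value rather than a platform setting.

```python
import math

CONTEXT_WINDOW_TOKENS = 200_000  # context size quoted in this post
CHARS_PER_TOKEN = 4              # assumption: rough heuristic, not the real tokenizer


def rough_token_count(text: str) -> int:
    """Estimate the token count from the character length."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(text: str, reserved_for_output: int = 4_000) -> bool:
    """Return True if the text plus an output budget fits in the window."""
    return rough_token_count(text) + reserved_for_output <= CONTEXT_WINDOW_TOKENS


print(fits_in_context("a" * 400_000))  # about 100K tokens
print(fits_in_context("a" * 800_000))  # about 200K tokens, no room left for output
```

Because the estimate is only approximate, it is safer to leave some headroom than to pack a request right up to the limit.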

API Pricing:

- $3 / million input tokens

- $15 / million output tokens

- Batch processing & prompt caching supported
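As a quick worked example of the rates above, the following sketch estimates the cost of a single request. The function name is illustrative, and the calculation ignores any batch-processing or prompt-caching discounts, since those rates are not given here.

```python
# Prices quoted above, in USD per million tokens.
INPUT_PRICE_PER_MTOK = 3.00
OUTPUT_PRICE_PER_MTOK = 15.00


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in USD for one request."""
    input_cost = input_tokens / 1_000_000 * INPUT_PRICE_PER_MTOK
    output_cost = output_tokens / 1_000_000 * OUTPUT_PRICE_PER_MTOK
    return input_cost + output_cost


# Example: a 150K-token document summarized into 2K tokens.
print(f"${estimate_cost(150_000, 2_000):.2f}")  # prints "$0.48"
```

Output tokens cost five times as much as input tokens, so long generated answers usually dominate the bill more than long prompts do.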

Claude + Nebula Block = Builder’s Playground

With Claude Sonnet 4 now available on Nebula Block, developers can:

- Fine-tune on custom datasets using Nebula’s GPU clusters (A100/H100/H200)

- Deploy inference endpoints in seconds with container snapshots

- Run experiments without overpaying—thanks to per-second billing

- Scale up/down instantly with auto-clustered GPU orchestration

Whether you’re building a retrieval-augmented agent, a Claude-powered coding assistant, or a multi-modal chatbot, Nebula Block gives you the infrastructure to move fast and stay lean.
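To illustrate why per-second billing matters for short experiments, here is a small sketch comparing it with a scheme that rounds up to the full hour. The hourly rate below is a made-up placeholder, not a Nebula Block price, and the hourly-rounded scheme is only a hypothetical point of comparison.

```python
import math


def per_second_cost(seconds: float, hourly_rate: float) -> float:
    """Cost when billed for exactly the seconds used."""
    return seconds * hourly_rate / 3600


def hourly_rounded_cost(seconds: float, hourly_rate: float) -> float:
    """Cost when every started hour is billed in full (hypothetical comparison)."""
    return math.ceil(seconds / 3600) * hourly_rate


HYPOTHETICAL_RATE = 2.00  # USD per GPU-hour, placeholder value for illustration
run_seconds = 10 * 60     # a 10-minute experiment

print(round(per_second_cost(run_seconds, HYPOTHETICAL_RATE), 2))  # 0.33
print(hourly_rounded_cost(run_seconds, HYPOTHETICAL_RATE))        # 2.0
```

The gap is largest for many short runs, which is the typical pattern when iterating on prompts or small fine-tuning jobs.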

Quickstart: Using Claude Sonnet 4 on Nebula Block

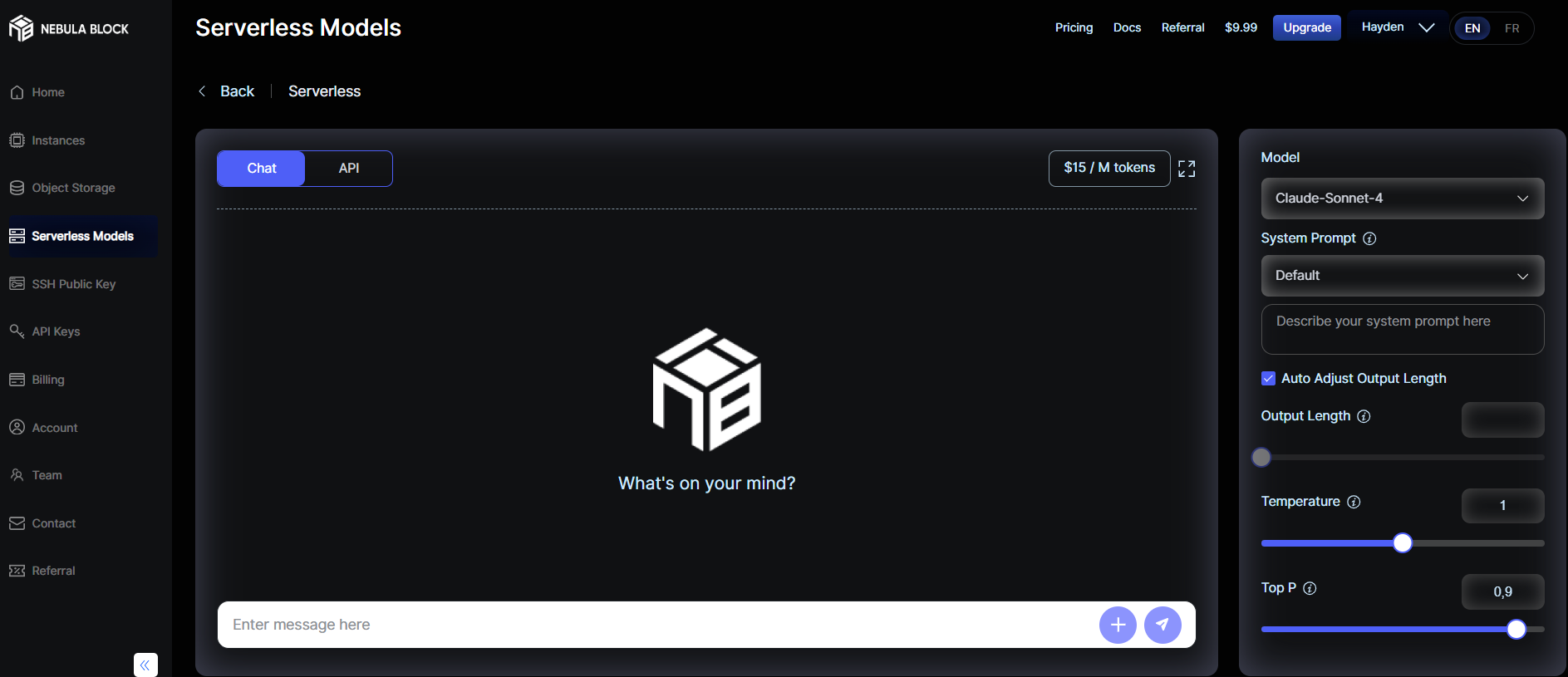

Option 1: Try in the Web UI (no code)

Visit Nebula Block's serverless models:

- Select the Claude-Sonnet-4 model

- Paste your prompt into the input box (system + user prompts supported)

- Click Send → Get instant results

- Adjust settings such as output length and temperature in the panel on the right.

Option 2: Call the API (OpenAI-compatible format)

Use the Claude Sonnet 4 API endpoint:

```python
import os

import requests

url = "https://inference.nebulablock.com/v1/chat/completions"

# The API key is read from the NEBULA_API_KEY environment variable.
headers = {
    "Content-Type": "application/json",
    "Authorization": f"Bearer {os.environ.get('NEBULA_API_KEY')}",
}

data = {
    "messages": [
        {"role": "user", "content": "Is Montreal a thriving hub for the AI industry?"}
    ],
    "model": "anthropic/claude-sonnet-4-20250514",
    "max_tokens": None,  # sent as JSON null (no explicit limit requested)
    "temperature": 1,
    "top_p": 0.9,
    "stream": False,
}

response = requests.post(url, headers=headers, json=data)
print(response.json())
```

🔐 Set the NEBULA_API_KEY environment variable to your actual API key.

Example Use Case: Claude Agent with Tool Use

- Iterative QA systems with 100K+ token history

- Financial summarizers for reports, filings, and disclosures

- Fine-tuning experiments for instruction-following or domain-specific tasks

- Memory-based agents using extended context + retrieval-augmented generation (RAG)
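For the tool-use agent pattern, the sketch below shows the local half of the loop: taking the tool calls from an assistant message and producing the replies to send back. It assumes the endpoint accepts and returns the OpenAI chat-completions tool-calling fields (`tools`, `tool_calls`, `tool_call_id`), which this post does not confirm. The `get_stock_price` tool, its data, and the sample message are all hypothetical, and no network request is made.

```python
import json


# Hypothetical local tool; a real agent would query a live data source here.
def get_stock_price(ticker: str) -> dict:
    prices = {"ACME": 123.45}  # made-up demo data
    return {"ticker": ticker, "price": prices.get(ticker)}


TOOL_REGISTRY = {"get_stock_price": get_stock_price}

# Tool schema in the OpenAI chat-completions format; it would be sent as data["tools"].
TOOLS = [{
    "type": "function",
    "function": {
        "name": "get_stock_price",
        "description": "Look up the latest price for a stock ticker.",
        "parameters": {
            "type": "object",
            "properties": {"ticker": {"type": "string"}},
            "required": ["ticker"],
        },
    },
}]


def run_tool_calls(assistant_message: dict) -> list[dict]:
    """Execute each requested tool call and build the 'tool' role replies."""
    replies = []
    for call in assistant_message.get("tool_calls") or []:
        fn = TOOL_REGISTRY[call["function"]["name"]]
        args = json.loads(call["function"]["arguments"])  # arguments arrive as a JSON string
        replies.append({
            "role": "tool",
            "tool_call_id": call["id"],
            "content": json.dumps(fn(**args)),
        })
    return replies


# Simulated assistant message containing one tool call.
sample_message = {
    "role": "assistant",
    "content": None,
    "tool_calls": [{
        "id": "call_1",
        "type": "function",
        "function": {"name": "get_stock_price", "arguments": '{"ticker": "ACME"}'},
    }],
}

print(run_tool_calls(sample_message))
```

In a full loop, these replies would be appended to `messages` after the assistant message and sent in a follow-up request, so the model can write its final answer using the tool results.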

💡 Want to launch now? Use the Search for GPU resources feature to provision Claude-ready infrastructure instantly.

Ready to Build?

Adding Claude Sonnet models to Nebula Block's platform marks another step toward democratizing access to advanced AI capabilities. Every builder, regardless of team size or budget, can now leverage sophisticated language models to create exceptional user experiences.

The cycle of fine-tuning, inference, and iteration has never been more accessible or cost-effective. The question isn't whether you can afford to experiment with advanced models—it's what you'll build when the barriers are removed.

Ready to Explore?

Sign up and explore now.

🔍 Learn more: Visit our blog and documents for more insights or schedule a demo to optimize your search solutions.

📬 Get in touch: Join our Discord community for help or Contact Us.

Stay Connected

💻 Website: nebulablock.com

📖 Docs: docs.nebulablock.com

🐦 Twitter: @nebulablockdata

🐙 GitHub: Nebula-Block-Data
