Embedding Models vs. Traditional NLP Approaches: A Shift in AI Efficiency

Natural Language Processing (NLP) has undergone significant transformations over the years, with a key transition from traditional statistical methods to embedding-based models. This shift has dramatically improved AI efficiency, enabling more sophisticated language understanding, contextual analysis, and downstream task performance. Let's explore the differences between these approaches and why embedding models have become the preferred choice.

Traditional NLP Approaches: Rule-Based & Statistical Methods

Before embedding models became prevalent, NLP relied on techniques such as:

- Bag-of-Words (BoW) and TF-IDF: Represented each document as a sparse vector of word counts or weights, ignoring word order and semantic relationships.

- N-grams and Probabilistic Models: Modeled text as fixed-length word sequences to estimate how likely a word is given the few words before it, but struggled with long-range dependencies.

- Part-of-Speech Tagging & Parsing: Relied on handcrafted rules, dictionaries, and syntactic structures.

- Machine Learning Models (e.g., Naïve Bayes, SVMs): Used feature engineering on structured text but lacked deep semantic representation.

These methods were often cheap to run, but they were labor-intensive to build, required significant domain knowledge, and struggled with ambiguity, context, and scale.
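To make the "ignoring semantic relationships" limitation concrete, here is a minimal pure-Python sketch of TF-IDF with cosine similarity. The tokenizer, the smoothed IDF formula, and the three toy documents are assumptions chosen for illustration, not a reference implementation of any particular library.

```python
import math
from collections import Counter


def tokenize(text):
    # Naive whitespace tokenizer (an assumption; real pipelines do more).
    return text.lower().split()


def tfidf_vectors(docs):
    """Return one sparse {term: weight} dict per document."""
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(tokenized)
    # Document frequency: how many documents contain each term.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        vec = {}
        for term, count in counts.items():
            tf = count / len(tokens)
            # Smoothed IDF so that no term gets a zero or negative weight.
            idf = math.log((1 + n_docs) / (1 + df[term])) + 1.0
            vec[term] = tf * idf
        vectors.append(vec)
    return vectors


def sparse_cosine(a, b):
    """Cosine similarity between two sparse {term: weight} vectors."""
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


docs = ["fast car", "quick automobile", "red car"]
vecs = tfidf_vectors(docs)

# Synonymous phrases with no shared tokens look completely unrelated.
sim_synonyms = sparse_cosine(vecs[0], vecs[1])
# Phrases that share a surface token ("car") look related.
sim_shared_token = sparse_cosine(vecs[0], vecs[2])
print(sim_synonyms, sim_shared_token)
```

Because "fast car" and "quick automobile" share no tokens, their similarity is exactly zero even though they mean nearly the same thing, while "fast car" and "red car" score above zero only because of the shared word.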

The Emergence of Embedding Models

Embedding models revolutionized NLP by introducing continuous, dense vector representations that capture semantic meaning. Key innovations include:

- Word Embeddings (Word2Vec, GloVe, FastText): Represent words in vector space, preserving semantic relationships through similarity.

- Contextual Embeddings (ELMo, BERT, GPT series): Use deep neural networks (bidirectional LSTMs in ELMo, transformers in BERT and GPT) to generate word representations that depend on the surrounding words.

- Sentence and Document Embeddings (Universal Sentence Encoder, Transformer-based architectures): Extend embeddings beyond words for improved contextual representation.

These models significantly outperform traditional approaches in sentiment analysis, translation, summarization, and conversational AI by leveraging vast amounts of training data.
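The idea of "preserving semantic relationships through similarity" can be sketched with a few dense vectors. The 3-dimensional values below are hand-picked for illustration and do not come from Word2Vec, GloVe, or any trained model; the mean-pooling helper is likewise a simplifying assumption, since contextual models such as BERT produce a different vector for a word depending on its sentence.

```python
import math

# Hypothetical toy vectors, hand-picked for illustration only.
TOY_EMBEDDINGS = {
    "car": [0.90, 0.10, 0.05],
    "automobile": [0.85, 0.15, 0.10],
    "banana": [0.05, 0.10, 0.95],
    "fast": [0.60, 0.70, 0.10],
    "quick": [0.55, 0.75, 0.10],
}


def dense_cosine(u, v):
    """Cosine similarity between two dense vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def sentence_embedding(text):
    """Average the vectors of known tokens (simple mean pooling)."""
    vectors = [TOY_EMBEDDINGS[t] for t in text.lower().split() if t in TOY_EMBEDDINGS]
    if not vectors:
        raise ValueError("no known tokens in text")
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


sim_related = dense_cosine(TOY_EMBEDDINGS["car"], TOY_EMBEDDINGS["automobile"])
sim_unrelated = dense_cosine(TOY_EMBEDDINGS["car"], TOY_EMBEDDINGS["banana"])
sim_sentences = dense_cosine(
    sentence_embedding("fast car"),
    sentence_embedding("quick automobile"),
)
print(sim_related, sim_unrelated, sim_sentences)
```

With these toy values, "car" sits much closer to "automobile" than to "banana", and the two phrases score as near-duplicates despite sharing no tokens, which is the behavior sparse count-based vectors cannot provide.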

Comparing Efficiency

- Enhanced Semantic Understanding: Embeddings capture context better than rule-based systems, leading to improved language comprehension.

- Reduced Need for Manual Feature Engineering: Unlike traditional models, embeddings learn representations directly from raw text.

- Scalability & Adaptability: Pre-trained embeddings generalize across multiple NLP tasks and domains without extensive retraining.

- Improved Performance in Low-Resource Languages: Transfer learning allows embeddings to adapt to languages with limited data.

- Real-Time Processing & Lower Latency: Document embeddings can be precomputed and compared with fast vector similarity search, which supports low-latency tasks like chatbots and search engines.
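As a rough illustration of how embeddings support search, the sketch below stores pre-normalized document vectors and ranks them against a query by dot product. The `TinyVectorIndex` class, the document IDs, and the vectors are all hypothetical; this is a brute-force scan, whereas production systems commonly use approximate nearest-neighbor indexes to keep latency low at scale.

```python
import math


def normalize(vector):
    """Scale a vector to unit length so dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vector))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vector]


class TinyVectorIndex:
    """Hypothetical in-memory index; brute-force search over all vectors."""

    def __init__(self):
        self._ids = []
        self._vectors = []

    def add(self, doc_id, vector):
        # Normalize once at indexing time so queries only need dot products.
        self._ids.append(doc_id)
        self._vectors.append(normalize(vector))

    def search(self, query_vector, k=2):
        q = normalize(query_vector)
        scored = [
            (sum(a * b for a, b in zip(q, v)), doc_id)
            for doc_id, v in zip(self._ids, self._vectors)
        ]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [(doc_id, score) for score, doc_id in scored[:k]]


index = TinyVectorIndex()
# Toy vectors standing in for the output of a real embedding model.
index.add("doc-cars", [0.90, 0.10, 0.05])
index.add("doc-fruit", [0.05, 0.10, 0.95])
index.add("doc-trucks", [0.80, 0.30, 0.10])

results = index.search([0.85, 0.15, 0.10], k=2)
print(results)
```

The same precomputed index can serve many downstream tasks (search, deduplication, recommendation) without retraining, which is the reuse the scalability bullet describes.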

Insights and Industry Impact

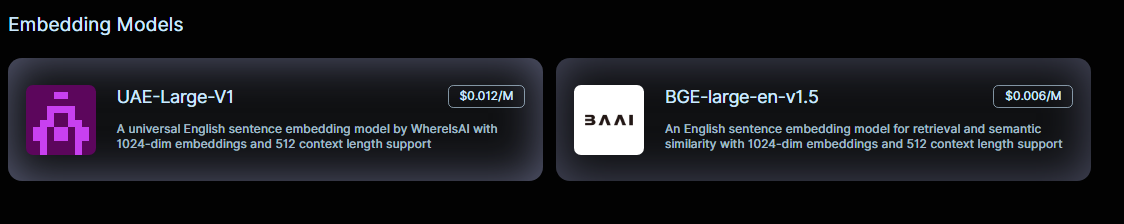

- Efficiency Leap: Embedding models cut development time by removing most manual feature engineering, and they offer higher accuracy on complex tasks like semantic search. Nebula Block’s platform makes these models accessible to startups and enterprises by leveraging cost-efficient GPU infrastructure.

- High-Throughput Optimization: Nebula Block’s serverless platform excels in handling high-throughput AI workloads, such as real-time analytics for smart cities or fintech, by combining advanced GPUs with techniques like key-value caching. This ensures robust performance under heavy demand, setting it apart in scalable AI deployment.

- Challenges: Embeddings require significant training resources and can inherit biases from their training data. Future advancements may focus on compact models and edge AI, areas where Nebula Block’s work with compact models such as NVIDIA’s Nemotron Nano VL shows promise.

- Industry Trends (June 2025): Recent investments, like Amazon’s $20 billion data center expansion and xAI’s $5 billion debt raise, reflect growing demand for GPU-accelerated AI infrastructure. Taiwan’s $51.74 billion AI component exports in May 2025 underscore GPU reliance, aligning with Nebula Block’s capabilities.
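The key-value caching mentioned in the high-throughput point above is a general transformer inference technique, and the following is only a sketch of the idea, not a description of how Nebula Block implements it. It uses a single attention head, tiny fixed projection matrices (`W_K`, `W_V`), and the token vector itself as the query, all of which are simplifying assumptions.

```python
import math

# Hypothetical fixed projection matrices for keys and values.
W_K = [[1.0, 0.0], [0.5, 1.0]]
W_V = [[0.0, 1.0], [1.0, 0.5]]


def matvec(matrix, vector):
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Scaled dot-product attention for one query over all given positions."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]


def decode_without_cache(tokens):
    """Recompute keys and values for the whole prefix at every step."""
    outputs, projections = [], 0
    for t in range(len(tokens)):
        prefix = tokens[: t + 1]
        keys = [matvec(W_K, x) for x in prefix]
        values = [matvec(W_V, x) for x in prefix]
        projections += 2 * len(prefix)
        outputs.append(attend(tokens[t], keys, values))
    return outputs, projections


def decode_with_cache(tokens):
    """Project each token once and reuse the cached keys and values."""
    keys, values, outputs, projections = [], [], [], 0
    for x in tokens:
        keys.append(matvec(W_K, x))
        values.append(matvec(W_V, x))
        projections += 2
        outputs.append(attend(x, keys, values))
    return outputs, projections


tokens = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [1.0, 1.0]]
plain_outputs, plain_cost = decode_without_cache(tokens)
cached_outputs, cached_cost = decode_with_cache(tokens)
print(plain_cost, cached_cost)
```

For four tokens, the uncached path performs 20 key/value projections and the cached path performs 8, while both produce the same attention outputs. The gap grows quadratically with sequence length, which is why caching matters for high-throughput generation.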

Conclusion

Embedding models mark a shift from traditional NLP, offering unmatched semantic understanding and scalability. Nebula Block’s serverless GPU platform amplifies this by delivering low-latency, cost-efficient AI solutions, driving innovation in real-time analytics and beyond.

