Canada Opens $300M AI Compute Fund — Here’s How to Apply

Canada has officially opened applications for the AI Compute Access Fund, a bold initiative under its $2 billion Canadian Sovereign AI Compute Strategy. With up to CAD $300 million allocated, this program is designed to eliminate one of the biggest roadblocks in AI development: affordable access to GPU infrastructure.

Whether you’re fine-tuning a foundation model, deploying an LLM API, or building agentic AI systems — this fund could help you access world-class compute without the world-class burn rate.

What Is the AI Compute Access Fund?

The AI Compute Access Fund provides direct financial support to cover up to 2/3 of the costs of eligible Canadian compute workloads, including:

✅ Model training and inference on GPUs

✅ RAG and agentic pipelines

✅ Fine-tuning and experimentation

Who’s Eligible?

To qualify, you must meet all of the following criteria:

- A Canadian-registered, for-profit company developing AI products, or a member of a consortium led by a corporation or limited liability partnership

- Fewer than 500 full-time employees (FTEs)

- R&D team based in Canada; activities must be carried out domestically

- Generating revenue, or attracting investor interest at the Series A funding level

- A signed compute service agreement (or equivalent documentation) provided at the time of application

How Much Can You Get?

- Total program budget: up to CAD $300M to offset AI compute costs (Budget 2024)

- Eligible reimbursement size: $100K–$5M

- Cost share: 66.7% of eligible Canadian compute costs for three years, and 50% of eligible foreign compute costs for two years

- Deadline to apply: July 31, 2025
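To make the cost-share figures above concrete, here is a minimal Python sketch that estimates a reimbursement from planned compute spend. It assumes, for illustration only, that the 66.7% rate applies to Canadian compute, the 50% rate to foreign compute, and that the $100K–$5M range bounds the total contribution per project. It does not model the three-year and two-year eligibility windows. The function name and the below-minimum handling are hypothetical; the official program guide governs actual amounts.

```python
from dataclasses import dataclass

# Rates taken from the figures quoted above; bounds are assumed to apply to the total.
CANADIAN_SHARE = 2 / 3          # ~66.7% of eligible Canadian compute costs
FOREIGN_SHARE = 0.5             # 50% of eligible foreign compute costs
MIN_CONTRIBUTION = 100_000      # assumed lower bound on the total contribution
MAX_CONTRIBUTION = 5_000_000    # assumed upper bound on the total contribution


@dataclass
class Estimate:
    raw: float       # reimbursement before applying the contribution bounds
    payable: float   # reimbursement after capping at MAX_CONTRIBUTION
    eligible: bool   # False if the raw amount falls below MIN_CONTRIBUTION


def estimate_reimbursement(canadian_spend: float, foreign_spend: float) -> Estimate:
    """Hypothetical estimator: apply each cost-share rate, then the contribution bounds."""
    if canadian_spend < 0 or foreign_spend < 0:
        raise ValueError("spend amounts must be non-negative")
    raw = canadian_spend * CANADIAN_SHARE + foreign_spend * FOREIGN_SHARE
    payable = min(raw, MAX_CONTRIBUTION)
    return Estimate(raw=raw, payable=payable, eligible=raw >= MIN_CONTRIBUTION)


if __name__ == "__main__":
    # Example: $900K of Canadian compute plus $200K of foreign compute.
    est = estimate_reimbursement(900_000, 200_000)
    print(f"raw={est.raw:,.0f} payable={est.payable:,.0f} eligible={est.eligible}")
```

Keeping the raw and capped amounts separate makes it easy to see when a project's spend would exceed the per-project ceiling.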

Why This Fund Matters

For years, Canadian startups and researchers have faced a critical bottleneck: access to affordable, scalable compute. The new fund directly addresses this challenge by:

- Leveling the playing field: You don’t need to raise millions to access GPUs like A100s or H100s anymore.

- Retaining talent: More AI builders can now stay in Canada, instead of migrating to Silicon Valley for resources.

- Accelerating time-to-impact: With easier access to infrastructure, teams can go from research to real-world deployment faster.

Why Nebula Block Wins

Nebula Block is ideally positioned to help eligible teams deploy their projects in compliance with the fund’s requirements.

Here’s how we help:

✅ Canadian GPU Infrastructure

Data centers located in Canada, so workloads qualify for the full 66.7% cost share, eligible until March 2028.

✅ Compliance & Sovereignty

Fully Canadian-hosted: meets Quebec's Law 25, PIPEDA, and PHIPA. Not subject to the U.S. CLOUD Act (unlike AWS/GCP).

✅ Certified AI Provider under ISED

Eligible for stacking with SR&ED, NRC-IRAP, and other Canadian grants.

✅ Flexible Compute & Billing

Serverless inference (pay per token), or on-demand and reserved GPU instances (reserved saves up to 40%) for LLMs and fine-tuning.

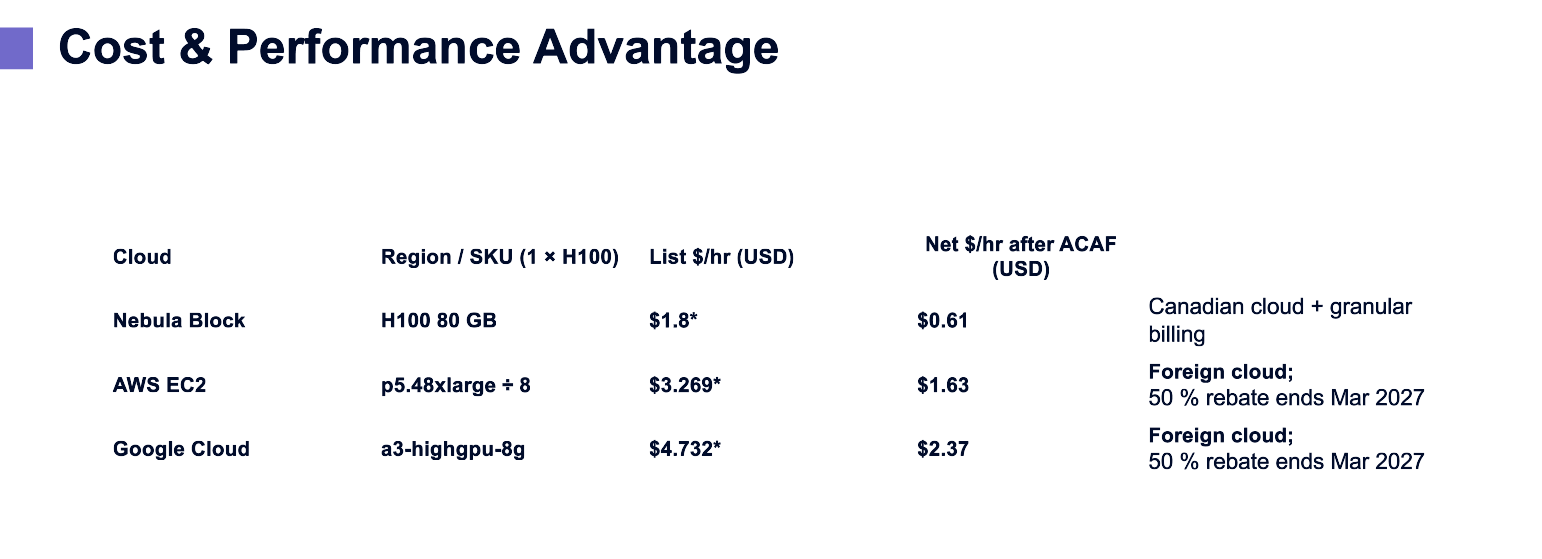

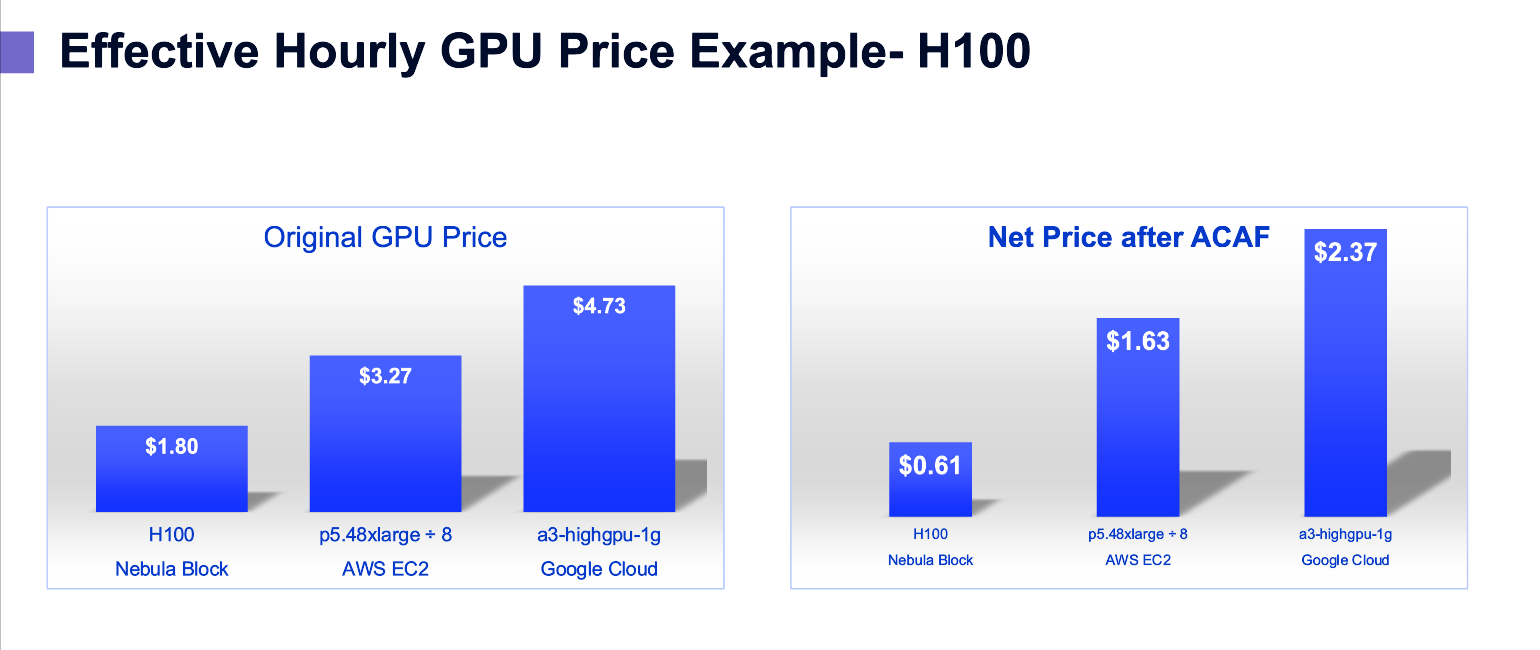

✅ Lowest Effective Price

Example: an H100 at $0.61/hr effective (after the 66.7% cost share), cheaper than AWS and Google Cloud even after the fund's 50% rebate for non-Canadian compute (ending 2027).

✅ OpenAI-Compatible APIs

Serve your model through a simple, scalable API endpoint: no Docker, minimal DevOps, and no infrastructure to manage.

✅ Confidential AI Workloads

Supports confidential computing on NVIDIA H100 and H200 — ideal for sensitive data or regulated projects.

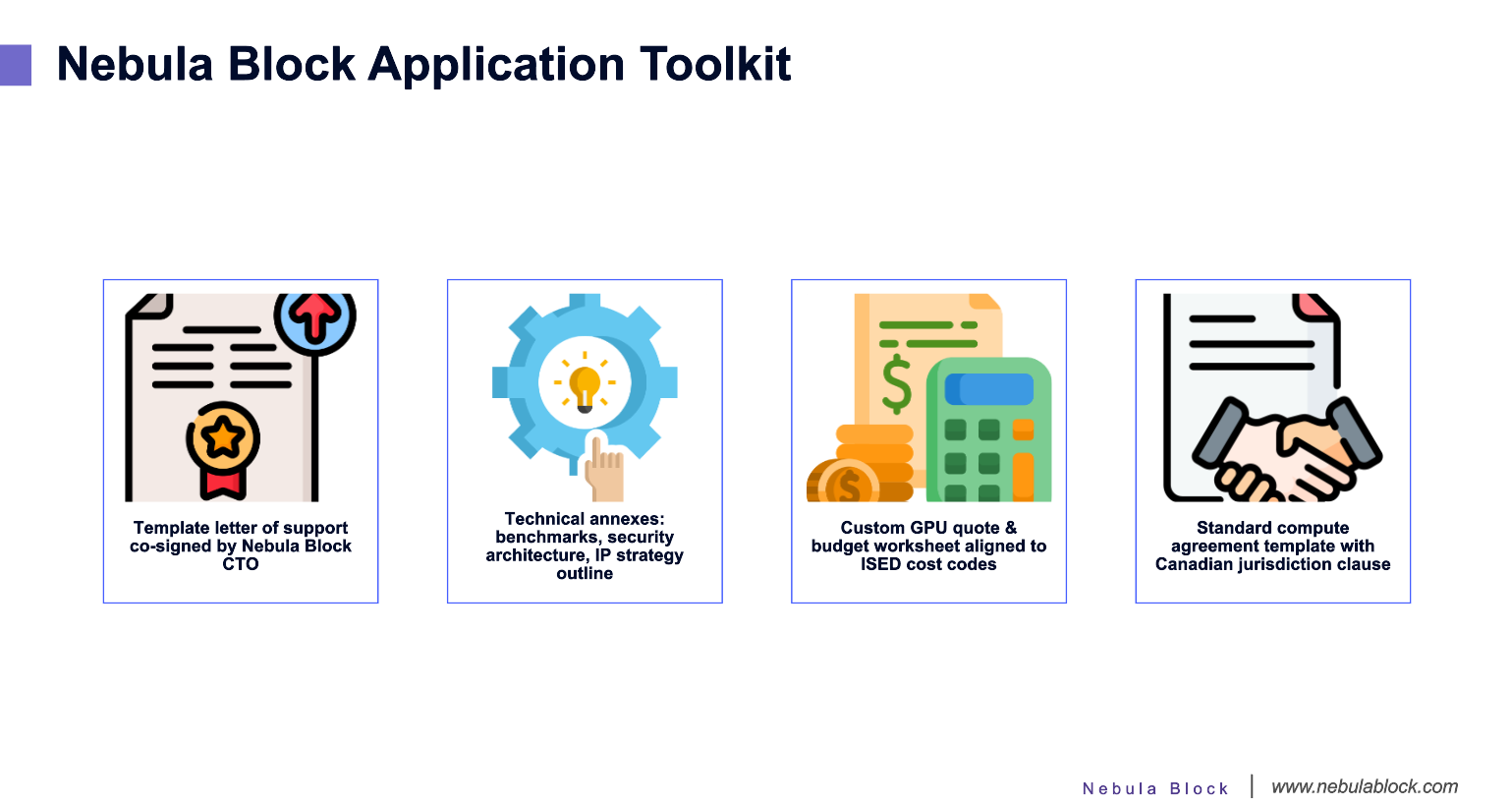

✅ Purpose-Built Toolkit for ISED Applications

A standard compute agreement template that includes a Canadian jurisdiction clause, an ISED-aligned budget sheet, and a CTO-signed support letter.

✅ Fast Setup, No Vendor Lock-in

Start in minutes. Pay as you go. Switch providers anytime.
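Since the API is described as OpenAI-compatible, a request should follow the familiar chat-completions shape. The Python sketch below only builds (and does not send) such a request using the standard library. `BASE_URL`, the model ID, and the `NEBULA_API_KEY` environment variable are placeholders invented for illustration; check docs.nebulablock.com for the real endpoint, model names, and authentication details.

```python
import json
import os
import urllib.request

# Placeholder values: replace with the endpoint and model listed in the provider's docs.
BASE_URL = "https://api.example.com/v1"                       # hypothetical base URL
MODEL = "your-model-id"                                       # hypothetical model ID
API_KEY = os.environ.get("NEBULA_API_KEY", "sk-placeholder")  # hypothetical env var


def build_chat_request(prompt: str) -> urllib.request.Request:
    """Build an OpenAI-style POST /chat/completions request without sending it."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 128,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request("Summarize the AI Compute Access Fund in one sentence.")
    print(req.get_method(), req.full_url)
    # To actually send it (requires a valid endpoint and key):
    # with urllib.request.urlopen(req) as resp:
    #     print(json.loads(resp.read())["choices"][0]["message"]["content"])
```

Because the payload uses the standard chat-completions fields, existing OpenAI client libraries should also work by pointing their base URL at the provider, assuming the compatibility is complete.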

Quick View: Nebula Block vs. Traditional GPU Providers for Canadian AI Teams

| Feature / Capability | Nebula Block | Traditional Providers |
|---|---|---|
| Canadian-based GPU Infrastructure | Yes: A100, H100, H200, RTX instances in Canada | Often hosted outside Canada (e.g., US regions) |
| Data Sovereignty Compliance | Fully compliant with Law 25, PIPEDA, PHIPA | Not guaranteed |
| Pricing Model | Granular, usage-based pricing: $0.61/hr H100 (after the 66.7% ACAF cost share) or serverless inference (pay per token) | Fixed pricing: $1.63–$2.37/hr H100 (with temporary 50% rebate, ends 2027) |
| API Access | OpenAI-compatible, plug and play | Complex DevOps required |
| Storage Integration | Integrated, encrypted, S3-compatible storage | Region-limited or separate (must be set up, configured, and managed manually) |
| Time to Deploy | Minutes, from upload to inference | Hours to days |
| ACAF Alignment (AI Compute Access Fund) | Eligible for 66.7% cost share until Mar 2028 | Max 50% cost share for non-Canadian clouds, for 2 years |
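The effective hourly prices in the comparison follow from a simple relation: effective price = list price × (1 − cost share). The Python sketch below works that relation backwards to show the pre-subsidy prices implied by the quoted figures. These implied list prices are derived arithmetic, not published rates, and the 2/3 and 1/2 shares are the fund rates quoted earlier.

```python
CANADIAN_SHARE = 2 / 3  # fund cost share for Canadian compute
FOREIGN_SHARE = 0.5     # fund cost share for non-Canadian compute


def effective_rate(list_price: float, share: float) -> float:
    """Hourly price the applicant actually pays after the fund's cost share."""
    return list_price * (1 - share)


def implied_list_price(effective: float, share: float) -> float:
    """Invert effective_rate: the pre-subsidy price implied by an effective price."""
    return effective / (1 - share)


if __name__ == "__main__":
    # Effective H100 prices quoted in the comparison table (currency as quoted).
    nebula = implied_list_price(0.61, CANADIAN_SHARE)
    low, high = (implied_list_price(p, FOREIGN_SHARE) for p in (1.63, 2.37))
    print(f"Nebula Block implied list price: ${nebula:.2f}/hr")
    print(f"Traditional providers implied list price: ${low:.2f}-${high:.2f}/hr")
```

Separating the list price from the cost share shows how much of any price gap comes from the higher Canadian share versus the underlying rate.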

✅ Conclusion: If you're applying for the AI Compute Access Fund, Nebula Block offers developer-friendly infrastructure that supports eligible Canadian workloads, with GPUs, APIs, and storage unified in a single scalable platform.

💡 Final Thought

Canada’s AI Compute Access Fund is more than just a subsidy — it’s a signal. A commitment to local innovation, open research, and accessible infrastructure.

If you’re building in Canada, this is your chance to unlock high-performance compute without the overhead.

Next Steps

- Prepare and sign your statement of interest (SOI); Nebula Block assists with the letter of intent (LOI)

- Receive a Nebula Block quote and draft compute agreement

- Submit your SOI by July 22

- Apply by July 31, 2025 — and let Nebula Block be your trusted infrastructure partner on the road to production.

Sign up and explore now.

🔍 Learn more: Visit our blog and docs for more insights, or schedule a demo.

📬 Get in touch: Join our Discord community for help or Contact Us.

Stay Connected

💻 Website: nebulablock.com

📖 Docs: docs.nebulablock.com

🐦 Twitter: @nebulablockdata

🐙 GitHub: Nebula-Block-Data

🎮 Discord: Join our Discord

✍️ Blog: Read our Blog

📚 Medium: Follow on Medium

🔗 LinkedIn: Connect on LinkedIn

▶️ YouTube: Subscribe on YouTube