Reserved GPU for LLM APIs — Optimize Cost for High Volume Traffic

Running inference APIs, fine-tuning pipelines, or agentic systems at scale? If your workload is always-on, then on-demand GPU pricing can quickly become your biggest bottleneck.

Reserved GPU Instances — now live on Nebula Block — help you lock in guaranteed compute at up to 40% less than standard pricing.

What Is a Reserved Instance?

A Reserved Instance (RI) is a long-term commitment to a specific GPU resource (A100, H100, 4090, etc.) on Nebula Block. In exchange for reserving capacity over a fixed term, you gain substantial cost savings and dedicated performance — ideal for scaling AI workloads with predictability.

Why Use Reserved GPUs?

Reserved GPUs are ideal for teams with stable or sustained workloads, especially those building:

- LLM APIs & inference endpoints that run 24/7

- RAG or agentic pipelines with high query volume

- Fine-tuning jobs that require continuous access to high-performance GPUs

- Enterprise AI platforms with strict compliance or performance SLAs

- Hybrid cloud deployments that need consistent base load performance

Key Benefits

| Feature | Benefit |

|---|---|

| Up to 40% Savings | Commit to a 1–3 month term and save significantly vs. on-demand pricing |

| Guaranteed Capacity | Get dedicated access to high-demand GPUs like A100, H100, 4090 |

| No Idle Waste | Run long jobs or APIs without worrying about instance interruption |

| Better Planning | Perfect for teams with predictable workloads or budget targets |

| Flexible Pairing | Mix with serverless GPUs for burst workloads or hybrid deployments |

Use Cases

- Hosting production LLM APIs (OpenAI-compatible)

- Running RAG pipelines with consistent traffic

- Continuous fine-tuning or LoRA training jobs

- Internal tools or dashboards requiring GPU backend

- Multi-user GPU tenancy across teams

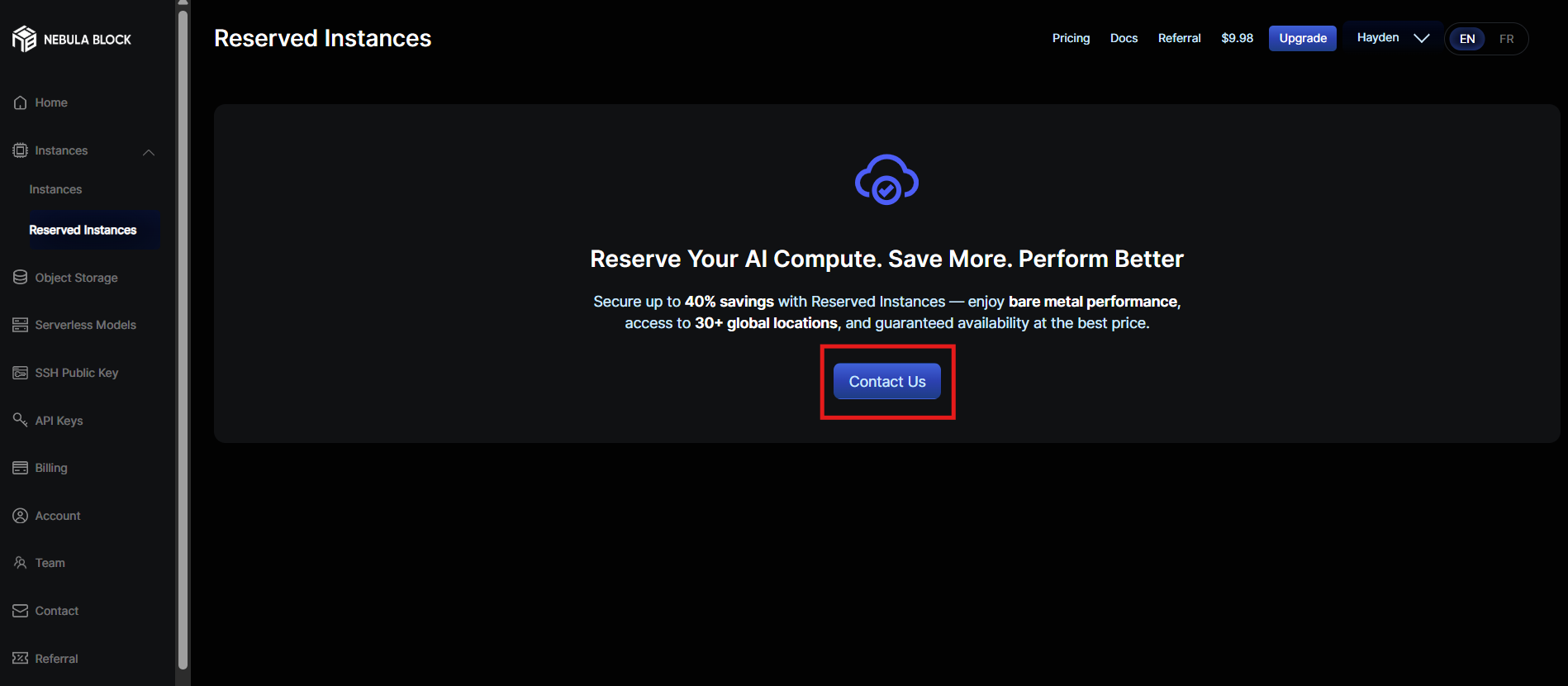

How to Reserve a GPU on Nebula Block?

Because every workload is different, we offer personalized Reserved Instances via direct engagement — ensuring the best fit for your compute needs.

Reserved Instances are only available via direct engagement. This allows our team to:

- You get the best-fit GPU type and region

- We align with your capacity needs and timeline

- You understand the contract length and discount tiers

👉 Ready to reserve?

- Visit: Reserved Instances

- Submit your request via contact form — we’ll help you lock in the right GPUs for your AI workloads.

Who Should Consider Reserved Instances?

- Teams deploying LLM APIs or inference backends in production

- Researchers running daily fine-tuning or experimentation

- Startups building stable RAG or agentic systems

- Enterprises with predictable monthly compute spend

Final Thoughts

If you're scaling LLM workloads and want to cut compute costs without sacrificing performance, Reserved GPU Instances on Nebula Block are the strategic move.

With guaranteed access, simplified billing, and up to 40% off — it’s everything your AI infrastructure needs to scale efficiently.

Next Steps

Sign up and explore now.

🔍 Learn more: Visit our blog and documents for more insights or schedule a demo to optimize your search solutions.

📬 Get in touch: Join our Discord community for help or Contact Us.

Stay Connected

💻 Website: nebulablock.com

📖 Docs: docs.nebulablock.com

🐦 Twitter: @nebulablockdata

🐙 GitHub: Nebula-Block-Data

🎮 Discord: Join our Discord

✍️ Blog: Read our Blog

📚 Medium: Follow on Medium

🔗 LinkedIn: Connect on LinkedIn

▶️ YouTube: Subscribe on YouTube